How to Achieve Style Transfer in Stable Diffusion


Stable Diffusion (SD) is one of the most popular locally deployed AIGC tools, and it is both simple and complex. It is simple because users with no drawing skills at all can create beautiful images in various styles. It is complex because the AI does not always "obey" instructions, so getting it to realize exactly what you have in mind can be quite challenging.

In addition, SD's image-to-image (img2img) mode lets you choose from a wide range of styles when reworking an existing image. That brings us to the topic of this article: style transfer. What is style transfer in Stable Diffusion, and how do you achieve it?

If you have no prior experience, this article explains several commonly used preprocessors in Stable Diffusion and shows how to switch styles to better serve your artistic creations.

What is Style Transfer in Stable Diffusion?

Style and composition transfer in Stable Diffusion involves altering an image's appearance and arrangement while preserving its essential elements.

Two components play crucial roles in style transfer in Stable Diffusion: IP-Adapter and ControlNet. The IP-Adapter combines characteristics from an image prompt and a text prompt to guide the generation of a new, modified image. ControlNet pre-processes the input image (for example, into an edge map or a depth map) and uses the result to steer the output. Working together, the two make image prompting possible within Stable Diffusion. In short, style transfer uses one image to influence the look of another, and it can change elements such as layout, colors, or faces in the picture.


How to Achieve Style Transfer in Stable Diffusion?

A concrete example demystifies the process far better than an abstract explanation. Read on to see, step by step, how to achieve style transfer in Stable Diffusion.

Step 1. Open Stable Diffusion, then choose the img2img option.

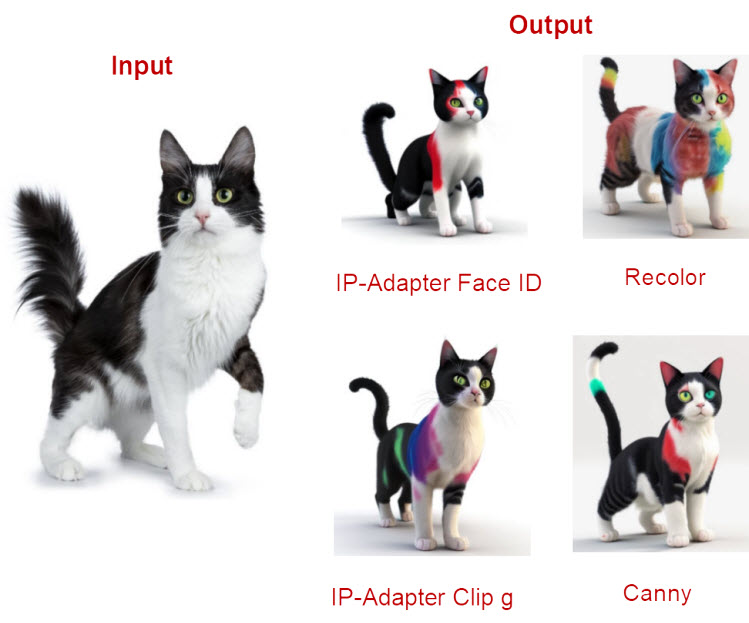

Step 2. Load your original image into Stable Diffusion. Here we load a photo of a cat with black-and-white fur.

Step 3. Enter your text prompt, for example: 3D image of a cute cat covered in colors. Enter a negative prompt as well if needed.

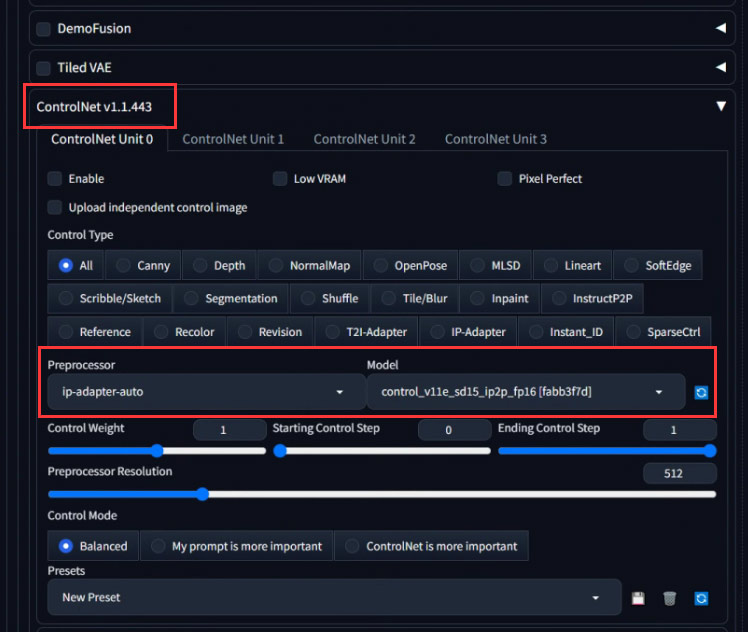
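For readers who prefer scripting to clicking, Steps 1 to 3 can be sketched as a request body. This is a minimal sketch that assumes the AUTOMATIC1111 web UI started with its `--api` option, which this article does not confirm is the interface in use. The field names `init_images`, `prompt`, `negative_prompt`, and `denoising_strength` belong to that API's `/sdapi/v1/img2img` endpoint; the helper name, the placeholder image bytes, and the negative prompt text are illustrative only.

```python
import base64
import json


def build_img2img_payload(image_bytes, prompt, negative_prompt="", denoising_strength=0.75):
    """Return a JSON-serializable body for an img2img request.

    Assumption: the target is the AUTOMATIC1111 web UI API, which expects
    the source image as a base64 string inside `init_images`.
    """
    return {
        "init_images": [base64.b64encode(image_bytes).decode("ascii")],
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "denoising_strength": denoising_strength,
    }


# Placeholder bytes stand in for the real cat photo; in practice you would
# read the file from disk, e.g. open("cat.png", "rb").read() (hypothetical name).
payload = build_img2img_payload(
    b"placeholder image bytes",
    prompt="3D image of a cute cat covered in colors",
    negative_prompt="blurry, low quality",  # illustrative, not from the article
)
print(json.dumps(payload, indent=2))
```

Under the same assumption, this body would be sent as a POST request to the `/sdapi/v1/img2img` path of your local web UI address. A lower `denoising_strength` keeps the result closer to the original photo, while a higher one gives the prompt more freedom.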

Step 4. Scroll down to the ControlNet section, where you can choose the control type, preprocessor, and model for the style transfer you want.

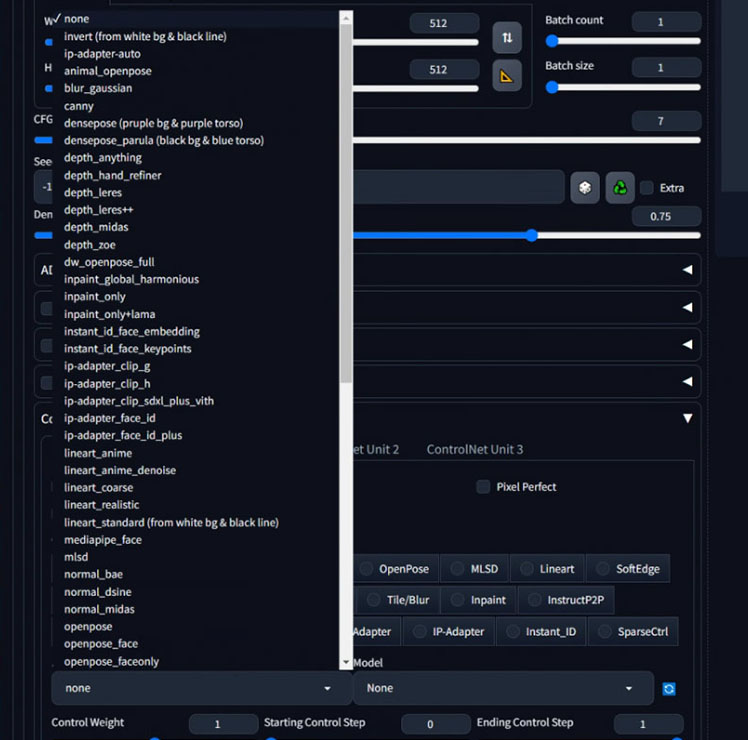

Step 5. Select a preprocessor. As you can see, a wide selection of preprocessors is available. Commonly used options include IP-Adapter (many variants), inpaint, recolor, canny, depth, and lineart. Here we pick a few of them to show the different styles Stable Diffusion generates.
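The choices made in Steps 4 and 5 can also be written down as data. The sketch below assumes the ControlNet extension for the AUTOMATIC1111 web UI, which reads its units from `alwayson_scripts.controlnet.args` in an API request. The helper functions are hypothetical, and the model name is a placeholder: use the exact string shown in your own Model dropdown.

```python
import json


def controlnet_unit(module, model, weight=1.0, control_mode="Balanced"):
    """Describe one ControlNet unit: preprocessor, model, and strength."""
    return {
        "enabled": True,
        "module": module,              # preprocessor, e.g. "canny"
        "model": model,                # ControlNet model matching the preprocessor
        "weight": weight,              # control weight
        "control_mode": control_mode,  # assumed to mirror the UI's Control Mode option
    }


def attach_controlnet(payload, *units):
    """Return a copy of a request body with ControlNet units attached."""
    merged = dict(payload)
    merged["alwayson_scripts"] = {"controlnet": {"args": list(units)}}
    return merged


base_payload = {"prompt": "3D image of a cute cat covered in colors"}
# Placeholder model name; installed models usually carry their own exact names.
canny = controlnet_unit("canny", "control_v11p_sd15_canny", weight=0.8)
request_body = attach_controlnet(base_payload, canny)
print(json.dumps(request_body, indent=2))
```

Keeping the preprocessor and model in one small dictionary makes it easy to swap canny for depth or lineart without touching the rest of the request.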

Step 6. Click the Generate button to create AI art based on the cat image you imported. Different preprocessors and control types produce different outputs. Check the comparison picture below to see the difference.

Note: if you want more control over the result, try more preprocessors and adjust the control weight, preprocessor resolution, control mode, presets, and so on.
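One way to explore these settings systematically is to enumerate every combination you want to compare before generating anything. The following is a rough sketch rather than a recommended workflow: the preprocessor names are taken from the list in Step 5 and may not match the exact module names in your installation, the weights are arbitrary, and `processor_res` is assumed to correspond to the preprocessor resolution setting. Each unit would still need a matching model before use.

```python
from itertools import product

# Names from the article's list; your installation may use longer module names.
PREPROCESSORS = ["canny", "depth", "lineart"]
WEIGHTS = [0.6, 1.0]  # arbitrary example values for the control weight


def sweep_units(preprocessors, weights, processor_res=512):
    """Build one labeled ControlNet unit per (preprocessor, weight) pair."""
    units = []
    for module, weight in product(preprocessors, weights):
        units.append({
            "label": f"{module}_w{weight}",
            "unit": {
                "enabled": True,
                "module": module,
                "weight": weight,
                "processor_res": processor_res,
            },
        })
    return units


grid = sweep_units(PREPROCESSORS, WEIGHTS)
for entry in grid:
    print(entry["label"])
```

The labels can double as output file names, which makes a side-by-side comparison of the generated images easier to assemble.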

Step 7. Click the Save button (below the output image) to save the generated AI art to the dedicated directory. The output is automatically saved in PNG format. Then click the blue download link to download the image to your local drive.
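If you generate images through an API instead of the Save button, the equivalent of this step is decoding the returned image and writing it to disk. The sketch below assumes a response shaped like the AUTOMATIC1111 web UI API's, where `images` holds base64-encoded PNG data. The function name, the file naming scheme, and the fake response used for the demonstration are all hypothetical.

```python
import base64
import tempfile
from pathlib import Path


def save_images(response_json, out_dir):
    """Decode every base64 image in the response and write it as a PNG file."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for index, encoded in enumerate(response_json.get("images", [])):
        # Drop a "data:image/png;base64," prefix if one is present.
        encoded = encoded.split(",", 1)[-1]
        path = out_dir / f"style_transfer_{index:02d}.png"
        path.write_bytes(base64.b64decode(encoded))
        paths.append(path)
    return paths


# A fake response stands in for a real API reply so the sketch runs offline.
fake_png = b"\x89PNG\r\n\x1a\n" + b"not a real image"
fake_response = {"images": [base64.b64encode(fake_png).decode("ascii")]}

with tempfile.TemporaryDirectory() as tmp:
    saved = save_images(fake_response, tmp)
    first_bytes = saved[0].read_bytes()

print(saved[0].name)
```

In real use you would pass a permanent folder instead of a temporary directory so the files persist after the script ends.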

The style transfer is now complete. Take some time to try more preprocessors and generate AI art in different styles.

Stable Diffusion style transfer is here to redefine image manipulation. It's not perfect, and replicating the artistry of human hands takes time. But the ability to convincingly paint your photos in styles ranging from Van Gogh's swirls to a hyperrealistic dreamscape is nothing short of mind-blowing. While technical hurdles such as resource-intensive training and content preservation remain, the future looks bright. With an active developer community and constant advancements, Stable Diffusion is poised to become a powerful tool for anyone who wants to unleash their inner artist or add a unique twist to their visuals. So fire up your diffusion models and get ready to experiment: the only limit is your imagination.