What Is a VAE and How to Use It in Stable Diffusion

Updated on

Stable Diffusion is a powerful machine-learning model for creating high-quality images. The Variational Autoencoder (VAE) is one of its core components: it translates images to and from the compact latent space in which the diffusion process runs. This article explains what a VAE is, what role it plays in Stable Diffusion, and how to use one. By the end, you'll have a basic understanding of how to combine a VAE with Stable Diffusion to get better results.

A Variational Autoencoder (VAE) is a type of artificial neural network that learns to generate new data similar to the data it was trained on. It does this by learning an approximation of the data's underlying distribution and then drawing fresh samples from that distribution. A VAE has two main components: the encoder compresses the input data into a latent-space representation, and the decoder reconstructs the data from that representation.
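To make the encoder and decoder description more concrete, here is a minimal pure-Python sketch of the two pieces that make an autoencoder "variational": the reparameterization trick used to sample a latent vector from the encoder's output, and the KL-divergence term that keeps the latent space close to a standard normal distribution. It assumes a diagonal Gaussian latent and uses plain squared error as the reconstruction loss. The function names and the beta weight are illustrative; the autoencoder used by Stable Diffusion was also trained with perceptual and adversarial losses, so treat this as the textbook formulation rather than its exact training objective.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z ~ N(mu, sigma^2) as z = mu + sigma * eps with eps ~ N(0, 1).

    Writing the sample this way keeps z differentiable with respect to
    mu and log_var, which is what lets the encoder be trained by backprop.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))


def vae_loss(x, x_hat, mu, log_var, beta=1.0):
    """Reconstruction error (squared error here) plus a weighted KL term."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    return recon + beta * kl_to_standard_normal(mu, log_var)


# Hypothetical encoder output for a 2-dimensional latent space.
rng = random.Random(0)
mu, log_var = [0.5, -1.0], [0.0, -2.0]
z = reparameterize(mu, log_var, rng)  # one latent sample the decoder would consume
```

The KL term is zero only when every latent dimension has mean 0 and variance 1, which is what gives the latent space its smooth, organized structure.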

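In Stable Diffusion, the VAE is what allows the diffusion process to work on a compact latent representation instead of on raw pixels. The U-Net performs its step-by-step denoising in this latent space, the VAE decoder turns the final latent into a full-resolution image, and in image-to-image tasks the VAE encoder first maps the input image into the latent space. Because every generated image passes through the decoder, the choice of VAE directly affects the quality of fine details such as faces. This matters especially in applications such as image synthesis, denoising, and other generative tasks that require high-quality outputs.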
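To see where the VAE sits in a text-to-image run, here is a minimal shape-bookkeeping sketch. It assumes the Stable Diffusion 1.x/2.x autoencoder, which downsamples each side of the image by 8 and uses 4 latent channels; it tracks only tensor shapes, not actual model calls, and the function names are hypothetical.

```python
def sd_latent_shape(height, width, latent_channels=4, downsample=8):
    """Shape (C, H, W) of the latent the U-Net works on for a given image size."""
    if height % downsample or width % downsample:
        raise ValueError("image size must be a multiple of the downsampling factor")
    return (latent_channels, height // downsample, width // downsample)


def txt2img_stages(height, width):
    """List the tensor shape at each stage of a text-to-image run."""
    latent = sd_latent_shape(height, width)
    return [
        ("random noise (latent space)", latent),
        ("after U-Net denoising steps", latent),  # denoising never leaves latent space
        ("after VAE decoder (pixels)", (3, height, width)),  # RGB image
    ]


for stage, shape in txt2img_stages(512, 512):
    print(f"{stage:30s} {shape}")
```

For image-to-image, the only change is an extra first stage: the VAE encoder maps the input picture from (3, H, W) to the latent shape before noise is added.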

1. Benefits of Using VAE in Stable Diffusion

Improved Image Generation

Using a well-trained VAE in Stable Diffusion can noticeably improve the quality of the images it produces. Because the VAE captures the key properties of the input data and its decoder renders every final image, a better VAE yields more coherent and detailed outputs: images that are not only visually pleasing but also more realistic.

Enhanced Model Stability

VAEs also contribute to the stability of the diffusion process. The denoising itself is performed by the U-Net, but it takes place in the organized latent space that the VAE provides, and the decoder translates the result back into pixels. A good VAE reduces the likelihood of distorted or unrealistic visuals, making the model's output more reliable and consistent.

Reduction of Computational Resources

VAEs reduce the computing power required for both training and image generation. Because they compress each image into a much smaller latent representation, the diffusion model processes far fewer values at every step. This speeds up training and lowers resource requirements, which benefits both research and practical applications.
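A quick worked example shows how large the saving is. The sketch below assumes the Stable Diffusion 1.x/2.x autoencoder, which shrinks each side of the image by a factor of 8 and stores 4 channels per latent position; other models may use different values.

```python
def pixel_values(height, width, channels=3):
    """Number of values in an RGB image."""
    return height * width * channels


def latent_values(height, width, latent_channels=4, downsample=8):
    """Number of values in the corresponding VAE latent."""
    return (height // downsample) * (width // downsample) * latent_channels


def compression_ratio(height, width):
    """How many pixel values each latent value stands in for."""
    return pixel_values(height, width) / latent_values(height, width)


print(pixel_values(512, 512))       # 786432
print(latent_values(512, 512))      # 16384
print(compression_ratio(512, 512))  # 48.0
```

The ratio is 48 for any image size divisible by 8, because each 8 × 8 block of RGB pixels (192 values) becomes a single latent position with 4 values. Compute does not necessarily shrink by exactly the same factor, since the U-Net's cost depends on its architecture, but every denoising step operates on the smaller tensor.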

While VAEs significantly enhance the quality of AI-generated images, there are times when you need to upscale these images to higher resolutions without compromising on quality. This is where Aiarty Image Enhancer comes into play.

Aiarty Image Enhancer is a powerful tool designed specifically for upscaling AI-generated images, including those created with Stable Diffusion. It leverages advanced AI algorithms to preserve the fine details and sharpness of the original image while increasing its resolution. Whether you're looking to print your AI-generated artwork or use it in high-resolution projects, Aiarty ensures that your images remain crisp, clear, and true to the original vision.


2. How to Use VAE in Stable Diffusion

Step 1: Download the VAE model

Using a VAE in Stable Diffusion is a simple process. First, download the VAE model. Stability AI has released two fine-tuned VAE models that you can use.

Here are the download links for both models:

Stable Diffusion VAE Model (EMA)

Stable Diffusion VAE Model (MSE)

Step 2: Install it

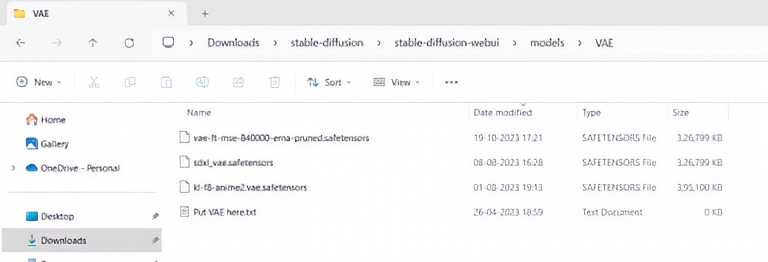

Once downloaded, place the model file (.ckpt or .safetensors) in the following directory: stable-diffusion-webui/models/VAE
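To confirm the file landed where the web UI looks for it, a small check like the following lists the VAE files in that folder. It assumes the web UI recognizes .ckpt, .pt, and .safetensors files in models/VAE, and it only inspects the top level of the folder; the function name is hypothetical.

```python
from pathlib import Path

# Assumption: file extensions the web UI accepts for VAE weights.
VAE_EXTENSIONS = {".ckpt", ".pt", ".safetensors"}


def list_installed_vaes(webui_root):
    """Return the VAE file names found in <webui_root>/models/VAE, sorted."""
    vae_dir = Path(webui_root) / "models" / "VAE"
    if not vae_dir.is_dir():
        return []  # folder missing: nothing is installed yet
    return sorted(
        p.name
        for p in vae_dir.iterdir()
        if p.is_file() and p.suffix.lower() in VAE_EXTENSIONS
    )


if __name__ == "__main__":
    # Hypothetical location; point this at your own stable-diffusion-webui folder.
    print(list_installed_vaes("stable-diffusion-webui"))
```

An empty list usually means the file is in the wrong folder or has an unexpected extension.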

For convenience, you can run the following commands on Linux or macOS from inside the stable-diffusion-webui directory to download both VAE files and put them in place:

```shell
wget https://huggingface.co/stabilityai/sd-vae-ft-ema-original/resolve/main/vae-ft-ema-560000-ema-pruned.ckpt -O models/VAE/vae-ft-ema-560000-ema-pruned.ckpt

wget https://huggingface.co/stabilityai/sd-vae-ft-mse-original/resolve/main/vae-ft-mse-840000-ema-pruned.ckpt -O models/VAE/vae-ft-mse-840000-ema-pruned.ckpt
```
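wget is not preinstalled on macOS, and the commands above assume a Unix-like shell. As an alternative, the following Python sketch downloads the same two files with the standard library's urllib.request.urlretrieve. It assumes you run it from the stable-diffusion-webui directory (or pass that path as webui_root); the helper names download_plan and download_all are hypothetical.

```python
import urllib.request
from pathlib import Path

# The two URLs used in the wget commands.
VAE_URLS = [
    "https://huggingface.co/stabilityai/sd-vae-ft-ema-original/resolve/main/vae-ft-ema-560000-ema-pruned.ckpt",
    "https://huggingface.co/stabilityai/sd-vae-ft-mse-original/resolve/main/vae-ft-mse-840000-ema-pruned.ckpt",
]


def download_plan(webui_root="."):
    """Pair each URL with its destination path under models/VAE."""
    vae_dir = Path(webui_root) / "models" / "VAE"
    return [(url, vae_dir / url.rsplit("/", 1)[-1]) for url in VAE_URLS]


def download_all(webui_root="."):
    """Download each VAE file unless it is already present."""
    for url, dest in download_plan(webui_root):
        dest.parent.mkdir(parents=True, exist_ok=True)
        if dest.exists():
            print(f"skipping {dest.name} (already present)")
            continue
        partial = dest.with_name(dest.name + ".part")
        print(f"downloading {dest.name} ...")
        urllib.request.urlretrieve(url, str(partial))
        partial.replace(dest)  # rename only after the download has finished
```

Calling download_all() skips files that already exist. Because each file is written under a temporary .part name and renamed only when complete, an interrupted download is not mistaken for a finished one on the next run.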

Step 3: Apply

Clicking the Apply settings button loads your VAE model. From then on, Stable Diffusion uses the selected VAE whenever you generate images. The setting is saved, so you do not have to select the VAE again for every generation.

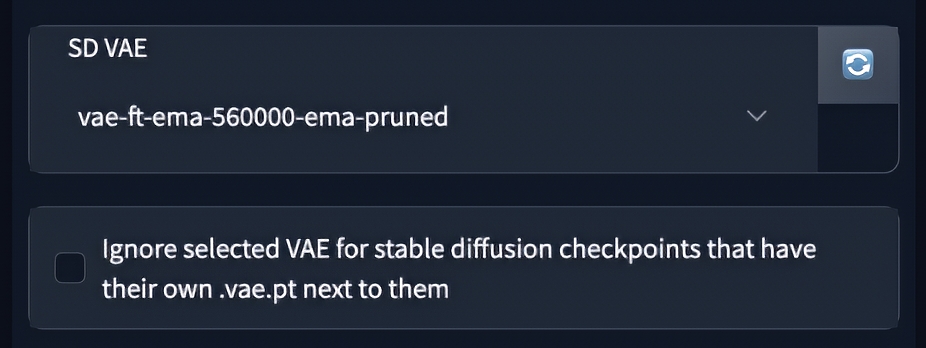

To select the VAE in the AUTOMATIC1111 web UI, open the Settings tab and click Stable Diffusion in the list on the left.

Find the SD VAE setting.

Select the VAE file you want to use from the drop-down menu.

Click the Apply settings button at the top. The new setting takes effect once it has finished loading.
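If you prefer to script this step, the following sketch sets the same option by editing the web UI's configuration file directly. It assumes the web UI keeps its settings in config.json inside the stable-diffusion-webui folder and stores the SD VAE choice under the key "sd_vae"; both are assumptions about the web UI's internals, so check your own config.json first. Edit the file only while the web UI is stopped, since a running instance may overwrite it. The function name is hypothetical.

```python
import json
from pathlib import Path


def set_default_vae(config_path, vae_name):
    """Set the (assumed) "sd_vae" key in config.json and return its previous value."""
    path = Path(config_path)
    # Start from the existing settings so every other option is preserved.
    config = json.loads(path.read_text(encoding="utf-8")) if path.exists() else {}
    previous = config.get("sd_vae")
    config["sd_vae"] = vae_name
    path.write_text(json.dumps(config, indent=4), encoding="utf-8")
    return previous
```

For example, set_default_vae("stable-diffusion-webui/config.json", "vae-ft-mse-840000-ema-pruned.ckpt") would make the MSE VAE the selected one the next time the web UI starts, assuming the key name above is correct.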

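3. Common Issues and Troubleshooting

The issues below come up when you train or fine-tune a VAE yourself, rather than when you simply load a pre-trained one.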

Common Errors

- Overfitting: If the model performs well on training data but poorly on validation data, it might be overfitting.

- Vanishing Gradients: If the training loss does not decrease, check for vanishing gradients and adjust the learning rate or model architecture.
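Both symptoms can be checked with simple heuristics. The sketch below is plain Python over lists of per-epoch losses and per-layer gradient values; the function names, the patience of 3 epochs, and the 1e-6 threshold are illustrative assumptions rather than standard values, and in practice you would read these numbers from your training framework's logs.

```python
import math


def looks_overfit(train_losses, val_losses, patience=3):
    """True if, over the last `patience` epochs, training loss kept falling
    while validation loss kept rising."""
    if len(train_losses) <= patience or len(val_losses) <= patience:
        return False  # not enough history to judge
    recent_train = train_losses[-(patience + 1):]
    recent_val = val_losses[-(patience + 1):]
    train_falling = all(b < a for a, b in zip(recent_train, recent_train[1:]))
    val_rising = all(b > a for a, b in zip(recent_val, recent_val[1:]))
    return train_falling and val_rising


def global_grad_norm(grads):
    """L2 norm over all gradient values (one flat list per layer)."""
    return math.sqrt(sum(g * g for layer in grads for g in layer))


def looks_vanishing(grads, threshold=1e-6):
    """True if the overall gradient norm is too small to move the weights."""
    return global_grad_norm(grads) < threshold
```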

Solutions and Best Practices

- Regularization: Use techniques like dropout to prevent overfitting.

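- Gradient Clipping: Apply gradient clipping to prevent exploding gradients, the opposite of vanishing gradients, where updates grow so large that the loss spikes or becomes NaN.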
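Here is a framework-free sketch of both remedies: inverted dropout, which randomly zeroes activations during training and rescales the survivors, and clipping by global norm, which rescales all gradients together when their combined size exceeds a limit. The function names are hypothetical; in PyTorch the corresponding built-ins are torch.nn.Dropout and torch.nn.utils.clip_grad_norm_.

```python
import math
import random


def inverted_dropout(values, p, rng):
    """Zero each value with probability p and scale survivors by 1/(1-p),
    so the expected activation is unchanged (applied at training time only)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in values]


def clip_by_global_norm(grads, max_norm):
    """Rescale all gradients together if their combined L2 norm exceeds max_norm."""
    total = math.sqrt(sum(g * g for layer in grads for g in layer))
    if total <= max_norm:
        return [list(layer) for layer in grads]  # within budget: unchanged copy
    scale = max_norm / total
    return [[g * scale for g in layer] for layer in grads]
```

Clipping by the global norm, rather than clipping each value separately, preserves the direction of the overall update, which is one reason it is a common choice.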

4. FAQs

What is VAE in Stable Diffusion?

A VAE, or Variational Autoencoder, is a neural network that learns to encode input data into a compact latent space and then decode it back to its original form. In Stable Diffusion, the diffusion process runs in that latent space and the VAE decoder produces the final image, so the VAE affects both the quality and the efficiency of image generation.

How does VAE improve Stable Diffusion?

VAEs improve Stable Diffusion by providing a structured latent space that enhances the coherence and detail of generated images, while also making the model more stable and efficient.

Can I use pre-trained VAE models in Stable Diffusion?

Yes, pre-trained VAE models can be used to save time and computational resources. Make sure the VAE is compatible with the Stable Diffusion model you use; for example, a VAE made for Stable Diffusion 1.x checkpoints is not intended for SDXL models.

What are the best practices for training a VAE model?

Best practices include using a suitable dataset, tuning hyperparameters, applying regularization techniques, and monitoring training performance to avoid overfitting and vanishing gradients.

Conclusion

Using a VAE in Stable Diffusion improves the quality, stability, and efficiency of image generation. By following the steps in this article, you can integrate a VAE into your own workflow and improve your image generation results. Experiment with different VAEs, settings, and datasets to realize the full potential of VAEs in Stable Diffusion.