What is Stable Diffusion Realistic? What are the Best SD Realistic Models?

Updated on

The advent of generative models has unlocked new frontiers in image synthesis. One of the standout technologies leading this charge is Stable Diffusion, a text-to-image diffusion model that has garnered attention for its remarkable ability to produce hyper-realistic images from textual descriptions.

In this article, we dive deep into the mechanics of Stable Diffusion, explore its best models for photorealistic style and walk you through the detailed guide on how to generate realistic people, scenes, objects and more.

What is Stable Diffusion Realistic?

Stable Diffusion is a text-to-image diffusion model capable of generating highly detailed images from textual descriptions. "Realistic" in the context of Stable Diffusion refers to the model's ability to produce images that closely resemble real-life scenes, objects, or people.

This is achieved through advanced training techniques and a large dataset that includes a wide variety of realistic images. The model iteratively refines images from a noisy state to a clearer one, guided by the textual input, resulting in photorealistic outputs.

Upscale and Enhance SD AI-generated Images with AI

- One-stop AI image enhancer, denoiser, deblurer, and upscaler.

- Use deep learning tech to reconstruct images with improved quality.

- Upscale your Stable Diffusion AI artworks to stunning 8K/16K/32K.

- Deliver Hollywood-level resolution without losing quality.

- Friendly to users at all levels, and support both GPU/CPU processing.

What are the Best Models to Generate Realistic Images in Stable Diffusion?

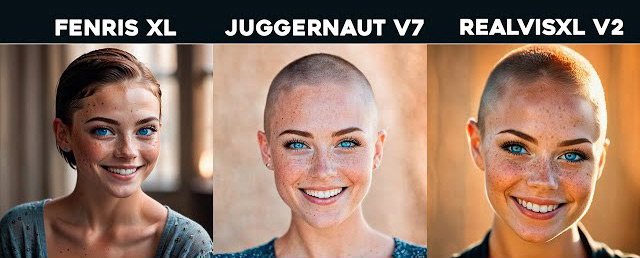

There are a bounty of models available for you to generate photorealistic people, scenes or objects images in Stable Diffusion currently. And different models will bring you different visual effects. Here we introduce the most commonly used realism models for your reference.

Realistic Vision

The groundbreaking Stable Diffusion Model, called Realistic Vision, aims to create visuals that closely resemble reality. It achieves this through a meticulously designed training process that blends the imaginary with the real, resulting in near-photographic accuracy. Due to its powerful capabilities, which span applications from virtual reality to art, Realistic Vision is rapidly transforming the field of generative artificial intelligence.

RealVisXL

RealVisXL is a robust stable diffusion model that excels in producing images with remarkable size, scale, and realism. Unlike earlier models, it is trained on Stable Diffusion's latest SDXL base model, making it significantly more powerful.

Designed to generate realistic images on a large canvas, as well as photorealistic ones, this model can create detailed, lifelike pictures at an unprecedented scale. This capability makes it perfect for applications where precision and size are essential, such as high-definition gaming and architectural visualization. RealVisXL is set to transform photorealistic experiences across various fields.

CyberRealistic

CyberRealistic specializes in generating digitally realistic photorealistic images. By using the Stable Diffusion Model, it adds an extra layer of complexity to virtual landscapes, creating a virtual environment that feels almost tangible. CyberRealistic is highly beneficial for developing realistic, immersive experiences in various applications, such as gaming or augmented reality.

Dreamlike Photoreal

The Dreamlike Photoreal model in Stable Diffusion is a specialized variant of the Stable Diffusion AI model designed to generate highly realistic and photorealistic images from textual descriptions.

Key features of the Dreamlike Photoreal model include High Realism, Text-to-Image Synthesis, Fine-Tuning and Training and applications. It represents an advancement in the capabilities of AI image generation, pushing the boundaries of what is possible with AI-driven photorealism.

URPM

The URPM (Ultra Realistic Portrait Model) in Stable Diffusion is another specialized variant of the Stable Diffusion AI model, designed specifically for generating highly realistic portraits from textual descriptions. This model focuses on creating lifelike human faces with great attention to detail, including skin texture, hair, and facial features.

Overall, the URPM model extends the capabilities of Stable Diffusion by focusing on the generation of realistic human portraits, making it a valuable tool for artists, designers, and developers working with digital human imagery.

How to Generate Photorealistic Images in Stable Diffusion?

Irrespective of generating realistic people, scenes or objects, the general process is much of muchness. Hence, here we just take reslistic people for instance. As for realism scenes or objects, just copy the below operating mentality.

Step 1. Choose a realistic model in Stable Diffusion. Here we choose Stable Diffusion v2.1 as an example.

Step 2. Prepare detailed realism prompts for SD. More details, better results. Consider the following elements when writing your realistic people prompts:

- Physical Appearance: Describe the person's physical traits, including age, gender, ethnicity, hair color, eye color, facial features, and expressions.

- Clothing and Accessories: Specify what the person is wearing, including the style, color, and any accessories like glasses, hats, or jewelry.

- Background and Setting: Mention the environment or setting where the person is located, which can help the model generate contextually accurate images.

- Pose and Activity: Describe the person's pose or what they are doing in the scene, which adds to the realism.

On top of the basic info about people, you need to take the following parameter settings into consideration, as well.

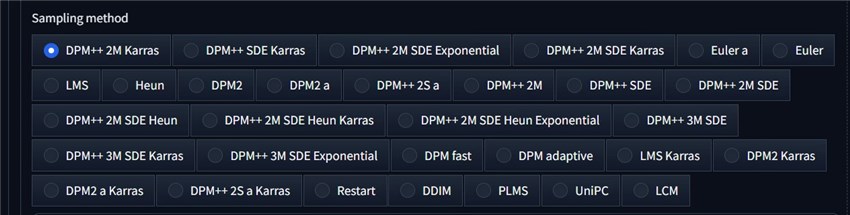

- Sampling method, including DPM++ 2M Karras, DPM++ SDE Karras, Euler/Euler a, LMS, DPM2, DPM++ 3M SDE, etc.

- Lighting. A good photo has interesting lights. The same applies to Stable Diffusion. The commonly used ones are front lighting, back lighting, studio lighting, sunshine, looking at the camera, etc.

- Camera, including DSLR, GoPro, Canon, Panasonic, 8K, ultra quality, sharp focus, depth of field, marco shots, tack sharp, dof, film grain.

- Angle, like low angle, high angle, profile angle, front angle.

Step 3. Blend two names. To generate reslistic people in SD, you can try blending two names to find some inspirations if you have no clue. One trick is to take advantage of celebrities. Their looks are the most recognizable part of their bodies. So they are guaranteed to be consistent. The syntax in AUTOMATIC1111 is [person 1: person2: factor].

factor is a number between 0 and 1. It indicates the fraction of the total number of steps when the keyword switches from person 1 to person 2. For example, [Lily Collins:Emma Watson:0.5] with 20 steps means the prompt uses Lily Collins in steps 1 – 10, and uses Emma Watson in steps 11-20. This is really interesting. You're strongly advised to have a try.

Step 4. Example Prompts. Here are some example prompts to help you generate realistic images of people:

Example 1: Professional Portrait

Prompt: "Photorealistic style. A professional portrait of a middle-aged woman with shoulder-length brown hair and hazel eyes. She is wearing a navy blue blazer over a white blouse, and she has a confident smile. The background is a simple, blurred office setting with soft lighting highlighting her face, front angle."

negative prompt: disfigured, ugly, bad, immature, cartoon, anime, 3d, painting, b&w

Example 2: Casual Scene

Prompt: "A young man in his early twenties, with short black hair and brown eyes, wearing a casual outfit of a red t-shirt and blue jeans. He is sitting on a park bench, holding a coffee cup and looking relaxed. The background shows a sunny park with trees and people walking in the distance, canon camera, profile angle, light and shade contrast."

negative prompt: low quality, fat, CGI cartoon, immature, anime, 3d, painting

Example 3: Family Photo

Prompt: "A happy family of four standing in front of their home. The father, a tall man with short blonde hair, is wearing a plaid shirt and jeans. The mother, a petite woman with long black hair, is wearing a floral dress. Their two children, a boy and a girl, are dressed in matching outfits of blue shorts and white t-shirts. They are all smiling and holding hands. front angle, depth of field"

negative prompt: disfigured, ugly, bad, immature, cartoon, anime, 3d, painting, b&w

Step 5. Fine-Tuning and Post-Processing. Even with detailed prompts, sometimes the generated images might need some adjustments. Here are some tips:

- Iterative Refinement: Generate multiple images and refine your prompts based on the results. Adjust details to get closer to your desired outcome.

- Post-Processing: Use image editing software to make minor adjustments to the generated images. This can include color correction, background modification, or enhancing certain features.

- Combining Outputs: Sometimes, combining elements from multiple generated images can help achieve the desired realism. Use tools like Photoshop to merge different parts of images.

Step 6. Hit Generate to create a realistic people in Stable Diffusion and wait for its final result.

If the output image is too small like only 512x512 pixels, you can use AI image upscaler - Aiarty Image Enhancer to AI upscale Stable Diffusion image from 512p to 2048p or 4096p (up to 8X its original size) for later poster prints, SNS sharing or demonstration. Meanwhile, its built-in Denoise, Deblur, Decompression and More-Detail features jointly will guarantee you a much more crisp and sharp quality.