Midjourney vs. Stable Diffusion: A Comprehensive Guide

The ability to generate high-quality images on demand has numerous applications across various industries, from advertising and marketing to entertainment and education. AI image generators have democratized the creation process, empowering individuals with limited artistic skills to bring their visions to life with remarkable detail and creativity.

As these tools continue to evolve and gain widespread adoption, they are reshaping the way we perceive and interact with visual media. However, with their immense potential comes the need for a deeper understanding of their capabilities, limitations, and ethical implications.

In this comprehensive blog post, we will delve into Midjourney and Stable Diffusion, two of the most prominent AI image generators on the market. We will explore their underlying technologies, user experiences, artistic styles, and advanced features, providing a detailed comparison to help you make an informed decision based on your specific needs.

How Midjourney and Stable Diffusion Work

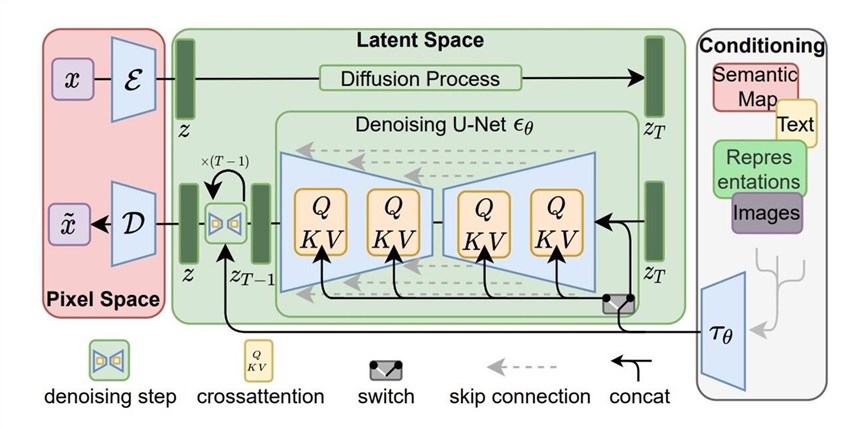

Midjourney and Stable Diffusion both generate images from text descriptions using a class of machine learning models called diffusion models (for Midjourney, this is widely assumed rather than officially documented). However, there are some key differences in their underlying approaches.

Midjourney uses a proprietary AI model that is generally understood to be a text-to-image diffusion system. The exact details of its architecture are not publicly known, but it likely pairs a language model or text encoder that interprets prompts with a diffusion model that generates the image. Its outputs suggest a model trained or fine-tuned on curated data with a strong bias toward highly artistic, stylized results.

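Stable Diffusion, being an open-source model, is far more transparent about its inner workings. It is a latent diffusion model: instead of working on full-resolution pixels, it runs the diffusion process in a compressed latent space produced by a variational autoencoder (VAE). During training, noise is progressively added to the latents of images from a large image-text dataset, and a denoising network (a U-Net in most versions) learns to reverse this process by predicting the added noise. At generation time, the model starts from pure random noise in latent space and iteratively denoises it, guided by the prompt's text encoding (in Stable Diffusion 1.x, this comes from OpenAI's CLIP text encoder, which was trained on roughly 400 million image-text pairs). The VAE decoder then turns the final latent into an image.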
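To see why working in a latent space matters, here is a quick back-of-the-envelope calculation. It is a sketch that assumes the Stable Diffusion 1.x setup, where the VAE downsamples a 512×512 RGB image by a factor of 8 into a 4-channel latent; other versions (such as SDXL, which targets 1024×1024) use different sizes, and the helper names are hypothetical.

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """(channels, h, w) of the VAE latent, assuming SD 1.x-style settings."""
    if height % downsample or width % downsample:
        raise ValueError("image sides must be divisible by the downsampling factor")
    return (latent_channels, height // downsample, width // downsample)


def element_count(shape):
    """Number of values in a tensor with the given shape."""
    total = 1
    for dim in shape:
        total *= dim
    return total


pixel_shape = (3, 512, 512)        # RGB image in pixel space
latent = latent_shape(512, 512)    # compressed latent the diffusion model works on
ratio = element_count(pixel_shape) / element_count(latent)
print(f"pixels: {element_count(pixel_shape):,}  latent: {element_count(latent):,}  ratio: {ratio:.0f}x")
```

Under these assumptions, each denoising step handles 16,384 values instead of 786,432, about 48 times fewer, which is a large part of why Stable Diffusion can run on consumer GPUs.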

Both systems are believed to follow a similar overall process:

- The text prompt is encoded into a numerical representation using a language model.

- This text encoding conditions the diffusion model to generate an image matching the prompt description.

- The diffusion model iteratively removes noise from an initial random input to produce the final image output over many denoising steps.
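The denoising loop in the last step can be sketched in plain Python. This is a toy, not either product's code: the "image" is a short list of numbers standing in for a latent, the linear noise schedule is the common DDPM default, and `oracle_noise_predictor` is a hypothetical stand-in for the trained, text-conditioned network (it cheats by knowing the answer, so the loop's mechanics are easy to check). The update rule is a deterministic DDIM-style step that walks 50 strided steps through a 1,000-step schedule.

```python
import math
import random


def make_alpha_bars(train_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule."""
    alpha_bars, prod = [], 1.0
    for t in range(train_steps):
        beta = beta_start + (beta_end - beta_start) * t / (train_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def oracle_noise_predictor(x_t, alpha_bar, target):
    """Hypothetical stand-in for the trained, text-conditioned network.

    It returns the exact noise separating x_t from `target`; a real model
    can only estimate this from x_t, the timestep, and the prompt encoding.
    """
    return [(x - math.sqrt(alpha_bar) * x0) / math.sqrt(1.0 - alpha_bar)
            for x, x0 in zip(x_t, target)]


def ddim_sample(target, sample_steps=50, train_steps=1000, seed=0):
    """Deterministic DDIM-style reverse loop (eta = 0) on a toy latent vector."""
    rng = random.Random(seed)
    alpha_bars = make_alpha_bars(train_steps)
    stride = train_steps // sample_steps
    timesteps = list(range(train_steps - 1, -1, -stride))  # 999, 979, ..., 19
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from pure noise
    for i, t in enumerate(timesteps):
        ab_t = alpha_bars[t]
        eps = oracle_noise_predictor(x, ab_t, target)
        # Clean sample implied by the current noisy sample and predicted noise.
        x0_pred = [(xi - math.sqrt(1.0 - ab_t) * e) / math.sqrt(ab_t)
                   for xi, e in zip(x, eps)]
        # Noise level of the next (less noisy) step; 1.0 means fully clean.
        ab_next = alpha_bars[timesteps[i + 1]] if i + 1 < len(timesteps) else 1.0
        x = [math.sqrt(ab_next) * x0 + math.sqrt(1.0 - ab_next) * e
             for x0, e in zip(x0_pred, eps)]
    return x


target_latent = [0.5, -1.2, 0.3, 0.9]  # hypothetical values the prompt "describes"
result = ddim_sample(target_latent)
print([round(v, 4) for v in result])
```

With the oracle, the loop lands on the target values up to floating-point error. A real model's noise estimates are imperfect, which is why samplers take many small steps and why step count affects output quality.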

While the core techniques are similar, Midjourney and Stable Diffusion likely differ in their model architectures, training data, and fine-tuning approaches, resulting in their distinct output styles and capabilities. Stable Diffusion's open-source nature allows more customization, while Midjourney aims for a refined and user-friendly experience tailored towards artistic image generation.

These differences are exactly what the following sections focus on.

Midjourney vs. Stable Diffusion: User Experience and Interfaces

Midjourney and Stable Diffusion offer vastly different user experiences due to their contrasting interface designs and access methods.

Midjourney Interface

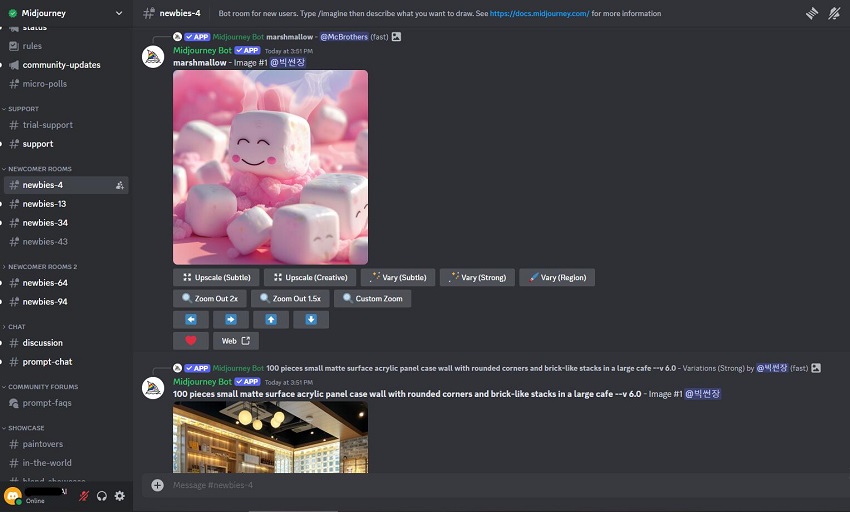

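The classic interface for Midjourney is a Discord bot. Users type the /imagine command followed by a text prompt (plus optional parameters) into a Discord channel or a direct message with the bot. Midjourney has also introduced a web interface, but the Discord bot remains the best-known way to use it.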
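To illustrate what these typed commands look like, here is a hypothetical helper (`build_imagine_command` is not part of any official Midjourney tool) that assembles a prompt with trailing double-dash parameters. `--stylize` and `--chaos` are real Midjourney parameters; the helper itself only builds a string to paste into Discord.

```python
def build_imagine_command(prompt, **params):
    """Compose a Midjourney /imagine command with trailing --parameters.

    Parameter names are passed without the leading dashes. A value of True
    emits a bare flag (e.g. --tile); None or False skips the parameter.
    """
    parts = [f"/imagine prompt: {prompt.strip()}"]
    for name, value in params.items():
        if value is True:
            parts.append(f"--{name}")
        elif value is not None and value is not False:
            parts.append(f"--{name} {value}")
    return " ".join(parts)


print(build_imagine_command("a lighthouse at dusk, watercolor", stylize=250, chaos=20))
```

Keeping parameters in a dictionary makes it easy to re-run the same prompt while changing one setting at a time, which is how quick iteration on Discord usually works in practice.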

This text-based approach has both advantages and limitations:

Pros:

- Intuitive for those familiar with chat interfaces.

- Allows quick iteration by simply editing and resubmitting prompts.

- Fosters a sense of community through the shared Discord server.

Cons:

- Less visual compared to traditional GUI interfaces.

- Can be cumbersome to type out lengthy or complex prompts.

- Limited customization options beyond the core text prompt.

Overall, Midjourney's Discord interface prioritizes ease of use and a streamlined experience over advanced customization tools.

Stable Diffusion Interface

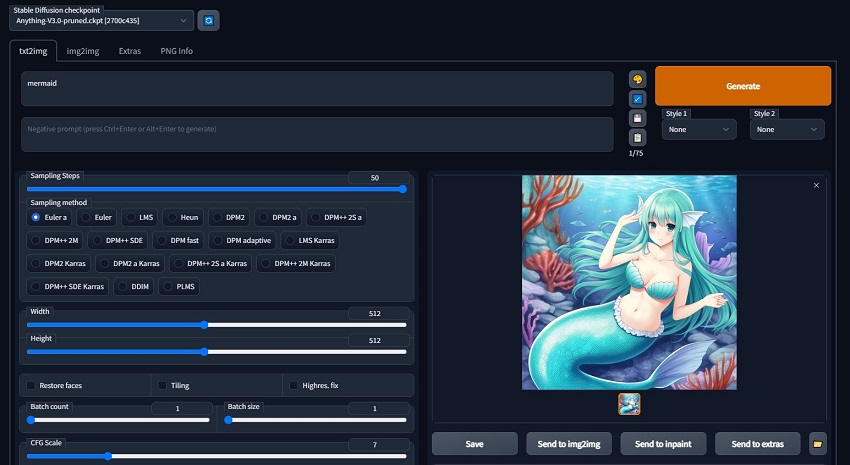

In contrast, because Stable Diffusion is open source, the community has developed multiple interface options for it:

1. Command-line interface: For technical users comfortable with terminal inputs.

2. Web UIs such as AUTOMATIC1111's Stable Diffusion web UI: Provide a full graphical interface with sliders, buttons, and advanced generation settings.

3. Cloud services: Hosted interfaces that run the model on remote servers, such as Stability AI's DreamStudio.
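Beyond point-and-click use, AUTOMATIC1111's web UI can also be scripted over HTTP. The sketch below assumes the UI is running locally on its default port and was launched with the `--api` flag, which exposes a `/sdapi/v1/txt2img` endpoint that returns base64-encoded images; the helper names, default values, and output file names are illustrative choices, so check the project's API documentation for your version.

```python
import base64
import json
import urllib.request

# Default local address; assumes the web UI was started with --api.
API_URL = "http://127.0.0.1:7860/sdapi/v1/txt2img"


def build_txt2img_payload(prompt, negative_prompt="", steps=30, cfg_scale=7.0,
                          width=512, height=512, seed=-1):
    """JSON body for the txt2img endpoint (seed=-1 asks for a random seed)."""
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "steps": steps,
        "cfg_scale": cfg_scale,
        "width": width,
        "height": height,
        "seed": seed,
    }


def txt2img(payload, url=API_URL, timeout=300):
    """POST the payload and return the generated images as raw PNG bytes."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        result = json.load(response)
    # Images come back as base64-encoded strings under the "images" key.
    return [base64.b64decode(image) for image in result["images"]]


# Example usage (requires a running web UI):
# payload = build_txt2img_payload("a red bicycle leaning on a brick wall, photo")
# for i, png in enumerate(txt2img(payload)):
#     with open(f"output_{i}.png", "wb") as f:
#         f.write(png)
```

Only the standard library is used, so the same script works against a remote or cloud-hosted instance by changing `url`.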

The variety of interfaces caters to different skill levels and preferences:

Pros:

- Web UIs are highly visual and intuitive for beginners.

- Extensive customization for techniques like inpainting, outpainting, upscaling.

- Can run locally on personal hardware for more flexibility.

Cons:

- Steeper learning curve, especially for command-line usage.

- Setup can be technically challenging for non-developers.

- Different interfaces have varying features and compatibility.

While Midjourney offers a cohesive but constrained experience, Stable Diffusion's open nature provides more versatility in interfaces at the cost of added complexity. The choice depends on whether you prioritize ease of use or extensive customization capabilities.

Midjourney vs. Stable Diffusion: Image Quality and Artistic Styles

Both Midjourney and Stable Diffusion are capable of generating high-quality, visually stunning images. However, they excel in different areas when it comes to image quality and artistic styles.

Midjourney's Artistic and Stylized Imagery

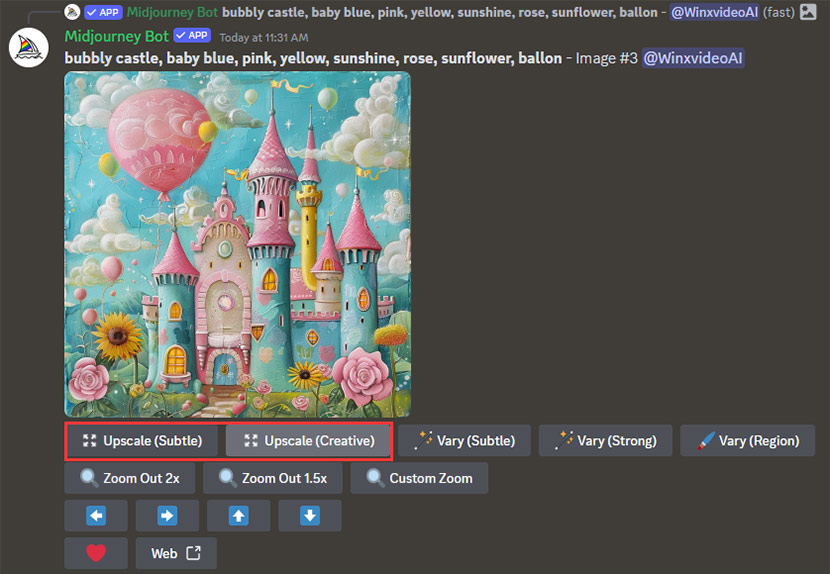

Midjourney is renowned for its ability to produce highly artistic and stylized images with a distinct, almost painterly quality. Its outputs often resemble intricate digital artworks or surreal, abstract compositions. Midjourney excels at rendering textures, lighting, and imaginative concepts with remarkable detail and creativity.

The images generated by Midjourney frequently have a dream-like, ethereal aesthetic that sets them apart from purely photorealistic renderings. This makes Midjourney particularly well-suited for creative projects, concept art, and artistic explorations where a unique, stylized visual expression is desired.

Stable Diffusion's Versatility and Photorealism

While Midjourney shines in artistic and stylized imagery, Stable Diffusion's strength lies in its versatility and ability to generate highly realistic, photographic-quality images across a wide range of styles and subjects.

Stable Diffusion excels at mimicking specific art styles, from photorealism to pixel art, offering users a diverse array of creative possibilities. Its outputs can be incredibly detailed and lifelike, making it a powerful tool for applications that require precise, photorealistic renderings, such as product visualization, architectural renderings, or scientific illustrations.

However, Midjourney and Stable Diffusion share a common challenge: producing high-quality, high-resolution images directly from the generation process. Initial outputs can suffer from noise, a lack of sharpness, and limited resolution (Stable Diffusion 1.x models, for example, generate natively at 512×512 pixels), which can detract from the overall impact of your artwork.

Aiarty Image Enhancer addresses these pain points by leveraging advanced AI technology to upscale, denoise, and sharpen your images. This software brings out the finest details, enhances textures, and ensures your artwork looks polished and professional. Whether you're looking to refine intricate designs or simply add a professional touch to your images, Aiarty Image Enhancer provides the tools you need for stunning results. Transform your creations effortlessly and see the difference that professional-grade image enhancement can make.

Midjourney vs. Stable Diffusion: Customization and Advanced Features

While both Midjourney and Stable Diffusion offer powerful image generation capabilities, Stable Diffusion stands out for its extensive customization options and advanced features enabled by its open-source nature.

Stable Diffusion's Customization Prowess

One of the key advantages of Stable Diffusion is its ability to leverage custom models trained on specific datasets or art styles. The open-source community has created and shared thousands of custom models, allowing users to generate images tailored to their unique requirements or preferences.

Some notable customization options in Stable Diffusion include:

- Inpainting: Modify or edit specific regions within an existing image while keeping the rest intact.

- Outpainting: Extend the canvas beyond the original image boundaries to create new content.

- Upscaling: Increase the resolution and detail of generated images using specialized upscalers.

- Prompt Engineering: Craft highly specific text prompts to control various aspects of the output.

- LoRA (Low-Rank Adaptation): A technique for efficiently fine-tuning large models, including Stable Diffusion, by training small low-rank update matrices instead of the full weights, which drastically reduces the number of trainable parameters.

- Hypernetworks: Train a small auxiliary network that adjusts the model's cross-attention layers, steering outputs toward a particular style or subject without retraining the full model.
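Inpainting and outpainting both boil down to a mask that marks which pixels the model may change. The sketch below is deliberately simplified and uses grayscale values in plain lists; the function names are hypothetical. In real pipelines the model generates the masked region conditioned on the surrounding content (dedicated inpainting checkpoints also receive the mask and the masked image as extra inputs), and a final blend like this one guarantees the unmasked pixels stay untouched.

```python
def composite_inpaint(original, generated, mask):
    """Blend generated content into the original using a mask.

    All arguments are equal-sized 2D lists. Mask values are 1.0 where new
    content should appear and 0.0 where the original must be kept; values
    in between give a soft transition.
    """
    return [
        [m * g + (1.0 - m) * o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


def pad_for_outpaint(image, pad, fill=0.0):
    """Extend an image by `pad` pixels on every side.

    Returns (canvas, mask), where the mask is 1.0 on the new border that
    the model should fill in and 0.0 over the original image.
    """
    height, width = len(image), len(image[0])
    canvas = [[fill] * (width + 2 * pad) for _ in range(height + 2 * pad)]
    mask = [[1.0] * (width + 2 * pad) for _ in range(height + 2 * pad)]
    for y in range(height):
        for x in range(width):
            canvas[y + pad][x + pad] = image[y][x]
            mask[y + pad][x + pad] = 0.0
    return canvas, mask


# Outpainting = padding plus inpainting of the new border.
canvas, mask = pad_for_outpaint([[0.2, 0.4], [0.6, 0.8]], pad=1)
generated = [[0.5] * 4 for _ in range(4)]  # stand-in for the model's output
result = composite_inpaint(canvas, generated, mask)
```

Treating outpainting as "pad, then inpaint the border" mirrors how many Stable Diffusion interfaces implement canvas extension.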

Additionally, Stable Diffusion can be run locally on personal hardware, giving users complete control over the model and its parameters and enabling advanced techniques like DreamBooth, which fine-tunes the model to reproduce a specific subject (such as a person, pet, or product) from a handful of example images.
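The LoRA idea from the list above is easy to see in code. LoRA keeps a weight matrix W frozen and learns two small matrices, B (d × r) and A (r × k), whose product is added as W + (alpha / r) · B·A. The sketch below uses plain lists and an illustrative 768 × 768 matrix with rank 4; the function names (and the `lora_down`/`lora_up` naming) are assumptions for illustration, not any specific library's API.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_merge(weight, lora_down, lora_up, alpha, rank):
    """Return W + (alpha / rank) * (B @ A) without modifying W.

    weight:    d x k frozen base matrix W
    lora_down: r x k trained matrix A
    lora_up:   d x r trained matrix B
    """
    delta = matmul(lora_up, lora_down)
    scale = alpha / rank
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(weight, delta)]


def trainable_params(d, k, rank):
    """(full fine-tuning, LoRA) trainable parameter counts for one d x k matrix."""
    return d * k, rank * (d + k)


full, lora = trainable_params(768, 768, rank=4)
print(f"full: {full:,}  LoRA: {lora:,}  reduction: {full // lora}x")
```

For this example matrix, LoRA trains 6,144 values instead of 589,824, a 96-fold reduction, which is why LoRA files are small enough to share and swap freely.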

Midjourney's User-Friendly Approach

While Midjourney may not offer the same level of granular customization as Stable Diffusion, it provides a more streamlined and user-friendly experience with its own set of advanced features:

- Variations: Generate multiple variations of an image with a single click, allowing for subtle or drastic changes.

- Upscaling: Increase image resolution (2x or 4x, depending on the model version) for higher detail and print quality.

- Inpainting/Outpainting: The "Vary (Region)" feature enables region-specific editing, while "Zoom Out" and "Pan" extend the canvas.

- Image Blending: Combine two to five images into a new, blended composition with the /blend command.

- Aspect Ratio Control: Adjust the aspect ratio of generated images for different formats with the --ar parameter.
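Midjourney's --ar parameter expects a ratio of whole numbers (for example 16:9 rather than 1.78:1), so a target format given in pixels has to be reduced first. This small sketch does that with a greatest common divisor; the helper name is hypothetical, and Midjourney still chooses the actual output pixel dimensions.

```python
from math import gcd


def aspect_ratio_flag(width, height):
    """Reduce pixel dimensions to the whole-number ratio used by --ar."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    divisor = gcd(width, height)
    return f"--ar {width // divisor}:{height // divisor}"


print(aspect_ratio_flag(1920, 1080))  # widescreen video frame
print(aspect_ratio_flag(1080, 1350))  # portrait social media post
```

For these two inputs the helper yields 16:9 and 4:5 respectively.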

Midjourney's strength lies in its intuitive interface and ease of use, making advanced editing tools accessible to users without extensive technical knowledge.

Midjourney vs. Stable Diffusion: Pricing and Accessibility

One of the key differences between Midjourney and Stable Diffusion lies in their pricing models and accessibility to users.

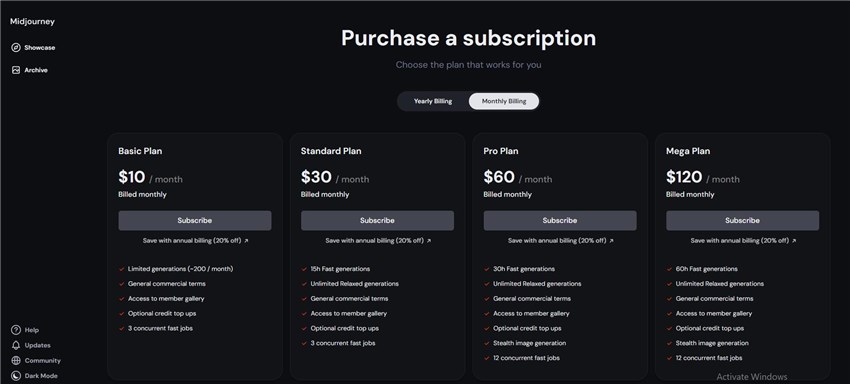

Midjourney operates on a paid subscription basis, with pricing tiers based on the number of Fast GPU hours allotted per month:

- Basic Plan: $10/month for 3.3 GPU hours.

- Standard Plan: $30/month for 15 GPU hours.

- Pro Plan: $60/month for 30 GPU hours.

- Mega Plan: $120/month for 60 GPU hours.
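The tiers are easier to compare per fast GPU hour. The sketch below uses the prices and hours listed above and assumes, as a rough rule of thumb, that an average image job uses about one minute of GPU time; real usage varies with model version, settings, and upscales, and the helper names are hypothetical.

```python
# Monthly price in USD and fast GPU hours per month, from the plan list above.
PLANS = {
    "Basic": (10, 3.3),
    "Standard": (30, 15),
    "Pro": (60, 30),
    "Mega": (120, 60),
}


def cost_per_fast_hour(plan):
    """USD paid per fast GPU hour on the given plan."""
    price, hours = PLANS[plan]
    return price / hours


def approx_jobs(plan, minutes_per_job=1.0):
    """Rough jobs per month, assuming each job uses `minutes_per_job` of GPU time."""
    _, hours = PLANS[plan]
    return round(hours * 60 / minutes_per_job)


for name in PLANS:
    print(f"{name:8} ${cost_per_fast_hour(name):.2f} per fast hour, ~{approx_jobs(name)} jobs/month")
```

By this measure, the Basic plan costs about $3.03 per fast hour while the other three plans all work out to $2.00, so the higher tiers mainly buy more volume at a slightly better rate.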

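These GPU hours are "Fast" mode time: every job you run consumes some of it, and higher tiers simply include more. Standard, Pro, and Mega subscribers also get unlimited Relax mode, which queues jobs more slowly without using up fast hours. There is currently no free tier or trial for Midjourney, which suspended its free trial due to high demand.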

While the subscription model provides a straightforward and user-friendly experience, it does come at a recurring cost, which may be a barrier for some users, especially those with limited budgets or casual usage needs.

In contrast, Stable Diffusion's open-source nature makes it highly accessible and cost-effective for a wide range of users. The base Stable Diffusion models are free to download and run locally on your own hardware, subject to their license terms.

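However, running Stable Diffusion locally requires a capable GPU and some technical knowledge for setup and configuration. Older models such as Stable Diffusion 1.5 can run on GPUs with as little as 4GB of VRAM using memory optimizations, but 8GB or more is recommended, especially for larger models like SDXL. This can present a barrier for users without access to suitable hardware or technical expertise.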

To address this, Stability AI offers online services like DreamStudio, which provides a user-friendly web interface for generating images using Stable Diffusion. DreamStudio operates on a credit-based system, with $10 granting 1,000 credits for image generation. New users also receive 25 free credits to get started.

Additionally, various third-party platforms and services have emerged, offering Stable Diffusion access through web interfaces or cloud-based solutions, often with free and paid tiers to cater to different user needs.

Midjourney vs. Stable Diffusion: Use Cases and Target Audiences

Midjourney shines in artistic and creative applications where a unique, stylized visual aesthetic is desired. Its ability to generate highly detailed, imaginative, and almost painterly images makes it an excellent tool for:

- Concept art and illustration.

- Creative projects like book covers, album artwork, etc.

- Artistic exploration and experimentation.

- Mood boards and visual inspiration.

Midjourney's user-friendly interface and streamlined experience cater well to artists, designers, and creatives who prioritize ease of use and a focus on artistic output over extensive technical customization.

On the other hand, Stable Diffusion's versatility and advanced features make it well-suited for a wide range of professional and commercial applications that require precise, photorealistic renderings or specialized customization:

- Product visualization and marketing materials.

- Architectural renderings and interior design.

- Scientific and technical illustrations.

- Digital art and creative projects with specific style requirements.

- Research and development in AI and computer vision.

Stable Diffusion's open-source nature and extensive customization options appeal to technical users, researchers, and professionals who value control, flexibility, and the ability to fine-tune the model for their specific needs.

Additionally, Stable Diffusion's cost-effectiveness and accessibility make it an attractive option for independent creators, small businesses, or hobbyists who may not have the budget for paid tools like Midjourney but still require high-quality image generation capabilities.

Future Developments and Trends for Both Midjourney and Stable Diffusion

The field of AI image generation is rapidly evolving, with new advancements and capabilities emerging at a breakneck pace. Both Midjourney and Stable Diffusion are at the forefront of this revolution, continuously pushing the boundaries of what's possible with text-to-image AI.

One of the primary areas of focus is enhancing image quality and resolution. While current outputs are already impressive, researchers are working on techniques to generate even higher-fidelity images with greater detail and clarity, potentially rivaling or surpassing professional photography and artwork.

Another exciting development is the potential for real-time, interactive image generation. Instead of generating static images, future iterations could allow users to dynamically manipulate and evolve images in real-time, enabling a more intuitive and creative experience.

AI image generators are also expected to integrate with other AI modalities, such as natural language processing, speech recognition, and video generation. This could lead to multimodal AI systems capable of understanding and generating content across various domains, opening up new possibilities for creative expression and communication.

As the technology matures, we can expect to see the development of specialized models tailored for specific domains or applications. For instance, models optimized for medical imaging, architectural visualization, or scientific illustration could revolutionize their respective fields.

Ultimately, the future of AI image generation promises to be an exciting and transformative journey, with Midjourney and Stable Diffusion leading the charge in democratizing creative expression and redefining the boundaries of visual art and media production.