6 Ways to Fix CUDA out of Memory in Stable Diffusion


The CUDA out of memory error in Stable Diffusion occurs when you try to generate a high-resolution AI image on a video card with low VRAM. To work around it, we tested and found 6 workable methods for various scenarios.

Among them, the easiest and most efficient is Method 1: generate AI images at a lower resolution, then upscale them later in a dedicated AI image upscaler.

The error can occur in Stable Diffusion in scenarios such as:

- Using txt2img at 768/1024 or higher resolutions

- Enabling Highres.fix at 2X or higher

- Using img2img at 768/1024 or higher resolutions

- Training checkpoint models

What is CUDA?

CUDA®, short for Compute Unified Device Architecture, is a parallel computing platform and programming model created by NVIDIA. In plain words, it lets software run large calculations across the GPU's many cores in parallel, which makes them much faster.

A CUDA out of memory error happens when a task needs more GPU memory (VRAM) than is available. Generating images at higher resolutions in Stable Diffusion is computationally intensive and memory-hungry, so it can lead to CUDA out of memory errors on GPUs with little VRAM. To work around the error, follow the methods below.
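To see why resolution matters so much, here is a back-of-the-envelope sketch in Python. It assumes an SD 1.x-style model (8 attention heads at the UNet's highest-resolution level, a latent image 1/8 of the pixel size, fp16 values) and naive attention without memory-efficient or sliced attention. Real usage depends on the model, the optimizations enabled, and everything else held in VRAM, so treat the numbers as illustrative only.

```python
def attention_map_bytes(width, height, heads=8, batch=1, bytes_per_elem=2):
    """Approximate size of the self-attention score matrices at the UNet's
    highest-resolution level for one denoising step.

    Assumptions (illustrative only): SD 1.x-style UNet with 8 heads, a VAE that
    downsamples by 8, fp16 values (2 bytes), naive (non-sliced) attention.
    """
    tokens = (width // 8) * (height // 8)  # one token per latent pixel
    # Each head stores a tokens x tokens matrix of attention scores.
    return batch * heads * tokens * tokens * bytes_per_elem


for w, h in [(512, 512), (768, 768), (1024, 1024)]:
    print(f"{w}x{h}: {attention_map_bytes(w, h) / 2**30:.2f} GiB")
```

Under these assumptions, going from 512x512 to 1024x1024 quadruples the number of latent tokens and multiplies the size of the attention scores by 16, which is why a resolution that is "only" twice as wide and tall can suddenly exceed your VRAM.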

1. Set a Lower Resolution and Upscale Later

Can't use Highres.fix even at 2X? Does img2img fail above 768x512? Don't worry: the quickest way to fix the CUDA out of memory error is to lower the resolution (width and height) in Stable Diffusion, then use a dedicated AI image upscaler to increase the resolution by 2X, 4X, 8X, or more after generation.
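As a rough planning aid, this Python sketch works backwards from the final size you want and the upscale factor you plan to use to the resolution you should generate at. `plan_generation` is a hypothetical helper, not part of Stable Diffusion or any upscaler; it rounds down to a multiple of 8 because Stable Diffusion's latent image is 1/8 of the pixel size.

```python
def plan_generation(target_width, target_height, upscale_factor, multiple=8):
    """Return ((gen_w, gen_h), (final_w, final_h)) for a generate-small-then-upscale workflow.

    The generation size is the target divided by the integer upscale factor,
    rounded down to a multiple of 8 (hypothetical helper, for illustration).
    """
    def snap(value):
        return max(multiple, (value // upscale_factor) // multiple * multiple)

    gen_w, gen_h = snap(target_width), snap(target_height)
    return (gen_w, gen_h), (gen_w * upscale_factor, gen_h * upscale_factor)


# Aim for a 4K (3840x2160) final image with a 4X upscaler.
generate_at, final_size = plan_generation(3840, 2160, 4)
print("Generate at", generate_at, "then upscale to", final_size)
```

Rounding down means the final size can land slightly under the target (2144 instead of 2160 pixels tall in this example), so crop or pad afterwards if you need an exact size.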

Many people doubt whether an image generated at a low resolution only, such as 512x512 or 768x768, has enough pixels for a standalone AI image upscaler to enlarge it to 4K/8K while keeping the details.

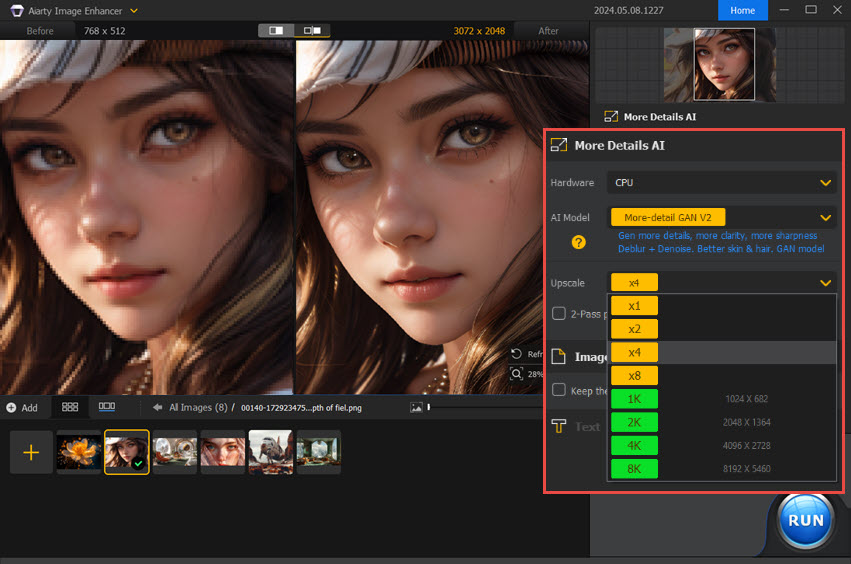

The answer is yes with Aiarty Image Enhancer as shown in the screenshots below.

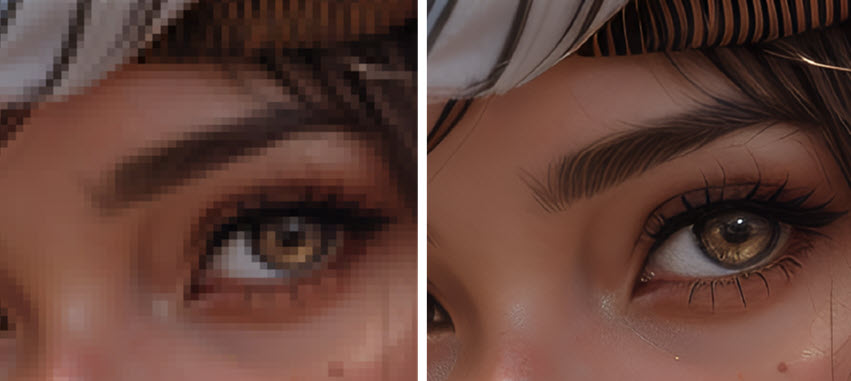

On the left is an AI-generated girl output by Stable Diffusion at 768x512; on the right is the same image upscaled 4X (to 3072x2048) by Aiarty Image Enhancer. The difference is stunning when you zoom in on a specific area for closer examination, such as the eyelashes and eyebrows.

Aiarty Image Enhancer is a standalone image upscaler and enhancement tool powered by 3 AI models. The models were trained on 6.78 million images and are optimized for AI art, photos, and web images.

The best part is, Aiarty Image Enhancer can generate realistic and natural new details upon upscaling.

It is optimized for NVIDIA, AMD, and Intel GPUs as well as CPUs, and runs with low VRAM. Even if you only have 4GB of VRAM, you can upscale images in Aiarty Image Enhancer without encountering CUDA out of memory errors. You can use Aiarty Image Enhancer as the best companion for Stable Diffusion, batch-upscaling images to large resolutions at faster speed and with better quality.

Download Aiarty Image Enhancer as the best upscaler for Stable Diffusion at low VRAM.

2. Set Max Split Size at a Smaller Value

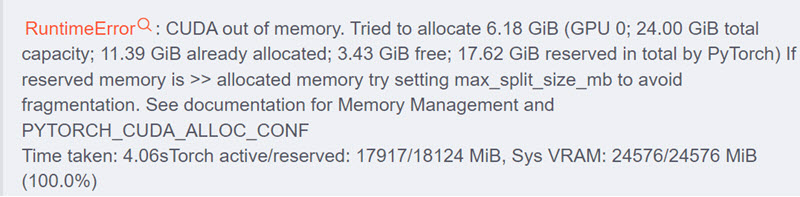

This method helps when VRAM fragmentation leads to the CUDA out of memory error. You can set the max_split_size_mb option of the PYTORCH_CUDA_ALLOC_CONF environment variable to a smaller value.

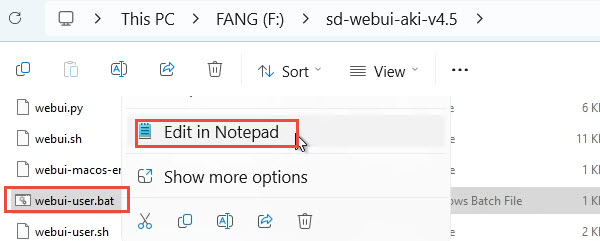

Step 1. Go to the Stable Diffusion WebUI folder.

Step 2. Edit webui-user.bat in any text editor.

For instance, you can right-click and edit it in Notepad or other text editors.

If you don't see the .bat extension, your system is probably set to hide file extensions. Just look for the webui-user file whose type is Windows Batch File.

You can set File Explorer to show file extensions in the View tab.

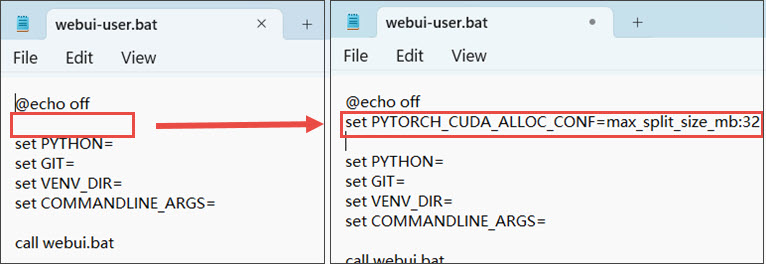

Step 3. Set the max split size according to your situation.

Copy and paste the following content under @echo off as shown in the screenshot.

set PYTORCH_CUDA_ALLOC_CONF=max_split_size_mb:32

Note: Read the error message and change 32 to a value that's workable for your hardware.

For instance, in the error message shown above, reserved - allocated = 17.62 GiB - 11.39 GiB = 6.23 GiB, which is more than the 6.18 GiB that PyTorch tried to allocate, while only 3.43 GiB is free. This matches the hint "reserved memory is >> allocated memory": there is enough memory reserved in total, but due to fragmentation it cannot be allocated as a single block.

According to the PyTorch documentation on memory management: "max_split_size_mb prevents the native allocator from splitting blocks larger than this size (in MB). This can reduce fragmentation and may allow some borderline workloads to complete without running out of memory."

Therefore, we can set a value lower than 6.18 GiB, expressed in MB: 6*1024 = 6144.

set PYTORCH_CUDA_ALLOC_CONF=max_split_size_mb:6144
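The reasoning above can be written as a small Python check. `diagnose_oom` is a hypothetical helper: you copy the numbers from the error message by hand (its exact wording varies between PyTorch versions), treating 6.18 GiB as the size of the failed allocation. It flags fragmentation when the reserved-but-unused memory would cover the request but the free memory would not, then suggests a max_split_size_mb just under the request, rounded down to whole GiB as in the example.

```python
import math


def diagnose_oom(tried_gib, allocated_gib, reserved_gib, free_gib):
    """Return (looks_like_fragmentation, suggested_max_split_size_mb) (hypothetical helper)."""
    # Memory PyTorch has reserved (cached) but is not currently using.
    reserved_unused = reserved_gib - allocated_gib
    looks_like_fragmentation = reserved_unused >= tried_gib and free_gib < tried_gib
    if not looks_like_fragmentation:
        return False, None
    # Round the failed request down to whole GiB and convert to MB (6.18 GiB -> 6144).
    whole_gib = math.floor(tried_gib)
    # For requests under 1 GiB this rule would give 0, so leave the choice to the user.
    suggestion = whole_gib * 1024 if whole_gib >= 1 else None
    return True, suggestion


# Numbers from the error message discussed above.
print(diagnose_oom(tried_gib=6.18, allocated_gib=11.39, reserved_gib=17.62, free_gib=3.43))
```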

You can also add a garbage collection threshold as shown below:

set PYTORCH_CUDA_ALLOC_CONF=garbage_collection_threshold:0.9,max_split_size_mb:512

Note: Set 512 to a value that works for your specific hardware.

If your Stable Diffusion WebUI crashes, you can try reducing the garbage_collection_threshold parameter, but it is recommended not to set it lower than 0.6.

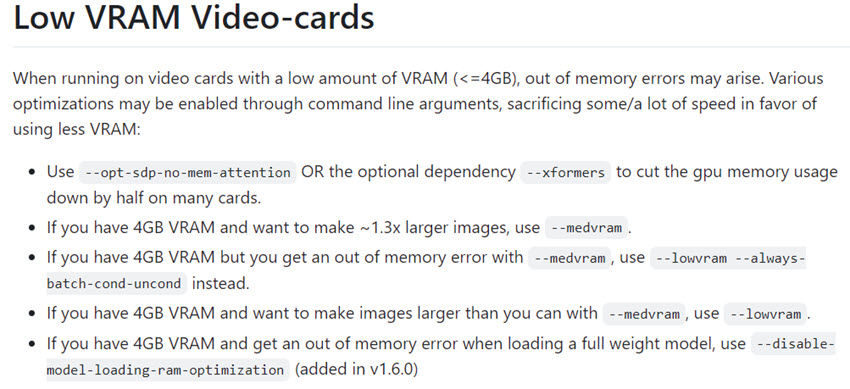
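webui-user.bat only takes effect when you launch the WebUI. If you instead run Stable Diffusion from your own Python script, one option is to set the same environment variable from Python, as in this sketch. `build_alloc_conf` is a hypothetical helper and its range checks are our own guards, not PyTorch's; the option names are the ones used above. The variable is read when PyTorch sets up its CUDA memory allocator, so set it before the first GPU allocation (for example, before `import torch`).

```python
import os
import warnings


def build_alloc_conf(max_split_size_mb=None, garbage_collection_threshold=None):
    """Build a PYTORCH_CUDA_ALLOC_CONF string from the options discussed above."""
    options = []
    if garbage_collection_threshold is not None:
        # Our own sanity checks: a fraction between 0 and 1, ideally not below 0.6.
        if not 0.0 < garbage_collection_threshold < 1.0:
            raise ValueError("garbage_collection_threshold should be between 0 and 1")
        if garbage_collection_threshold < 0.6:
            warnings.warn("thresholds below 0.6 are not recommended")
        options.append(f"garbage_collection_threshold:{garbage_collection_threshold}")
    if max_split_size_mb is not None:
        options.append(f"max_split_size_mb:{int(max_split_size_mb)}")
    return ",".join(options)


# Set this before PyTorch starts using the GPU; doing it before `import torch` is safest.
os.environ["PYTORCH_CUDA_ALLOC_CONF"] = build_alloc_conf(
    max_split_size_mb=512, garbage_collection_threshold=0.9
)
print(os.environ["PYTORCH_CUDA_ALLOC_CONF"])
```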

3. Set to Use Lower VRAM

You can open the webui-user.bat file and add the --medvram or --lowvram parameter after set COMMANDLINE_ARGS= to reduce VRAM usage.

This lets the Stable Diffusion WebUI use less VRAM, reducing the likelihood of CUDA out of memory errors, at the cost of slower generation (--lowvram is slower than --medvram).

Note: On Linux, add it to the webui-user.sh file instead.

For detailed information, check the wiki section on troubleshooting low VRAM video cards, written by the author of the AUTOMATIC1111 Stable Diffusion WebUI.
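If you prefer to script this edit, here is a minimal Python sketch. `add_commandline_arg` is a hypothetical helper that assumes a webui-user.bat containing a `set COMMANDLINE_ARGS=` line; it does not handle the `export COMMANDLINE_ARGS=...` syntax used in webui-user.sh.

```python
import re


def add_commandline_arg(bat_text, flag):
    """Append `flag` to the `set COMMANDLINE_ARGS=` line of webui-user.bat text (hypothetical helper)."""
    newline = "\r\n" if "\r\n" in bat_text else "\n"  # keep the file's line endings
    lines = bat_text.splitlines()
    for i, line in enumerate(lines):
        match = re.match(r"^(\s*set COMMANDLINE_ARGS=)(.*)$", line, flags=re.IGNORECASE)
        if match:
            prefix, args = match.groups()
            existing = args.split()
            if flag not in existing:  # don't add the same flag twice
                existing.append(flag)
            lines[i] = prefix + " ".join(existing)
            break
    else:
        raise ValueError("no 'set COMMANDLINE_ARGS=' line found")
    return newline.join(lines) + newline


# Example on the text of a typical webui-user.bat:
sample = "@echo off\n\nset PYTHON=\nset GIT=\nset VENV_DIR=\nset COMMANDLINE_ARGS=\n\ncall webui.bat\n"
print(add_commandline_arg(sample, "--medvram"))

# To edit the real file (back it up first):
#   from pathlib import Path
#   bat = Path("webui-user.bat")
#   bat.write_text(add_commandline_arg(bat.read_text(), "--medvram"))
```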

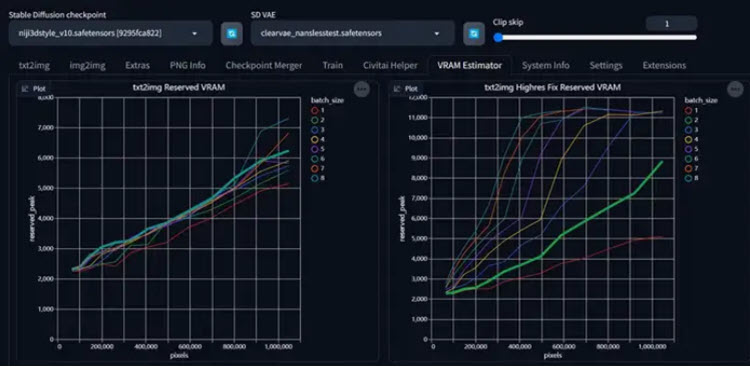

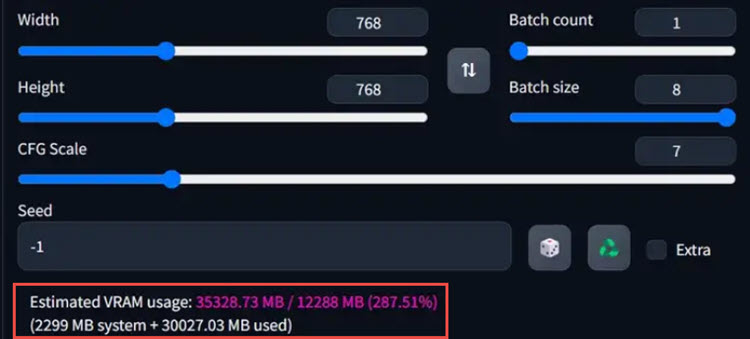

4. Use VRAM Estimator Extension

Another way is to use the VRAM Estimator extension. You can install it directly from the Extensions tab in the WebUI.

Step 1. Install VRAM Estimator extension.

https://github.com/space-nuko/a1111-stable-diffusion-webui-vram-estimator

Step 2. Run the benchmark.

Once installed, a VRAM Estimator (VRAM Estimation) panel will appear in your SD WebUI. You need to run the benchmark first.

Depending on your VRAM size, this process can take anywhere from several minutes to a few hours.

If your VRAM is greater than 16GB, it's recommended to increase the maximum image size to 2048 and also increase the Max Batch Count to 16. This will help determine the actual performance limits of your GPU.

Notes:

You only need to do this once, but if you change your GPU, upgrade PyTorch, or there's a major WebUI update (such as a new accelerator), it's best to run it again.

After the benchmark is complete, whenever you change parameters, the extension estimates the VRAM usage of the task and marks it with different colors, helping you avoid VRAM overflow in most cases.

The plugin works for all hardware configurations, and you can even use it on a Mac.
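We haven't reproduced the extension's internals here. As an illustration of the general idea (benchmark once, then estimate for new settings), this Python sketch linearly interpolates peak VRAM from your own benchmark measurements and labels the result with a color. `estimate_vram_mb`, `risk_color`, the linear model, and the 80% warning level are all assumptions, not the extension's code.

```python
from bisect import bisect_left


def estimate_vram_mb(benchmarks, pixels):
    """Linearly interpolate peak VRAM (MB) for an image with `pixels` pixels.

    `benchmarks` is a list of (pixel_count, peak_vram_mb) pairs measured on your GPU;
    pixel counts must be distinct. Outside the measured range, the nearest segment
    is extrapolated.
    """
    points = sorted(benchmarks)
    if len(points) < 2:
        raise ValueError("need at least two benchmark points")
    xs = [x for x, _ in points]
    # Pick the segment around `pixels`, clamped to the first/last segment.
    i = min(max(bisect_left(xs, pixels), 1), len(points) - 1)
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    return y0 + (y1 - y0) * (pixels - x0) / (x1 - x0)


def risk_color(estimated_mb, total_vram_mb, warn_fraction=0.8):
    """Traffic-light label for an estimate; the 80% warning level is an arbitrary choice."""
    if estimated_mb > total_vram_mb:
        return "red"
    if estimated_mb > warn_fraction * total_vram_mb:
        return "yellow"
    return "green"
```

To use it, pass your own measurements and the pixel count `width * height` of the image you plan to generate, then compare the estimate with your card's total VRAM.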

5. SD WebUI Memory Release

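Sometimes you may still find that VRAM stays occupied. This is mainly due to how memory is reclaimed: some unused Python objects are only freed when Python's garbage collector runs, and PyTorch's caching allocator keeps freed blocks reserved for reuse instead of returning them to the GPU.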

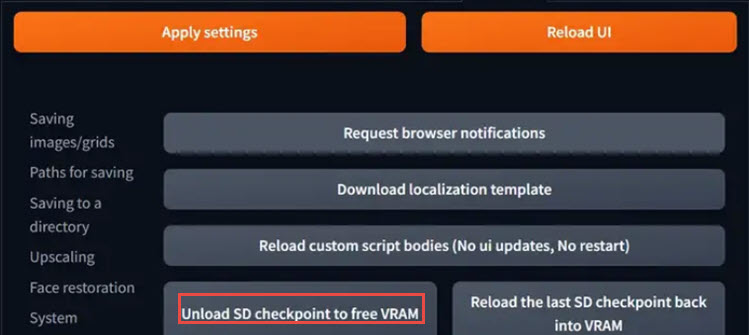

Previously, the only fix was to restart the SD WebUI or even the system. Now you can manually release VRAM using the "Unload SD checkpoint to free VRAM" option in Settings.

If you have already configured the parameters from the previous methods, you probably won't need to release VRAM manually.

Tips:

Alternatively, you can also use the SD WebUI memory release tool.

https://github.com/Haoming02/sd-webui-memory-release

This extension for the AUTOMATIC1111 WebUI releases memory after each generation.
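Outside the WebUI, the usual manual equivalent is to run Python's garbage collector and then call PyTorch's `torch.cuda.empty_cache()`. This sketch shows that pattern; we assume, without having checked its source, that the extension does something similar, so treat it as an illustration rather than the extension's code. `release_vram` is a hypothetical helper name.

```python
import gc


def release_vram():
    """Free unreachable Python objects, then return PyTorch's unused cached GPU memory."""
    collected = gc.collect()  # frees objects kept alive only by reference cycles
    try:
        import torch
    except ImportError:
        return collected  # PyTorch not installed: nothing GPU-related to release
    if torch.cuda.is_available():
        # Only releases cached blocks that no live tensor is using; a model that is
        # still referenced (e.g. a loaded checkpoint) stays in VRAM.
        torch.cuda.empty_cache()
    return collected


print("objects collected:", release_vram())
```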

6. Use Google Colab for Deployment

If you are still unable to fix the CUDA out of memory error, you might consider using Google Colab as an alternative.

Google Colab is a cloud-based notebook environment that offers GPU resources for users. You can run Stable Diffusion on Google Colab to avoid low VRAM issues.
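Once the notebook is open, it's worth confirming that a GPU runtime is actually attached (in Colab, Runtime > Change runtime type) and how much VRAM it has before you pick a resolution. A minimal Python sketch using PyTorch's device-query functions; `describe_gpu` is a hypothetical helper name.

```python
def describe_gpu(index=0):
    """Return the name and total VRAM (GiB) of a CUDA device, or None if there is none."""
    try:
        import torch
    except ImportError:
        return None  # PyTorch is not installed in this runtime
    if not torch.cuda.is_available():
        return None  # e.g. a CPU-only runtime
    props = torch.cuda.get_device_properties(index)
    return {"name": props.name, "total_vram_gib": round(props.total_memory / 2**30, 2)}


info = describe_gpu()
print(info if info else "No CUDA GPU detected; switch the runtime to a GPU type.")
```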

Among the six methods, Method 1 is the easiest way to go. It doesn't require any technical skills and can save you hours of effort while still delivering clear AI images.

Methods 4 and 5 don't require you to edit Stable Diffusion's configuration files. Methods 2 and 3 work once you experiment and find the right settings for your computer.

Method 6 is an alternative that runs Stable Diffusion online instead of locally, taking advantage of Google's cloud computing.